What Is the Smoothing Technique?

Naïve Bayes is a probabilistic classifier based on Bayes' theorem and is used for classification tasks.
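Smoothing matters for Naïve Bayes because a word that never appears with a class in the training data would otherwise get probability zero, and that single zero wipes out the whole product of likelihoods. A common fix is additive (Laplace) smoothing, which adds a pseudo-count `alpha` to every word count. The minimal sketch below assumes a multinomial model over whitespace-tokenized text. The function names `train_naive_bayes` and `predict` and the toy documents are hypothetical.

```python
import math
from collections import Counter, defaultdict


def train_naive_bayes(docs, labels, alpha=1.0):
    """Multinomial Naive Bayes with additive smoothing (alpha=1 is Laplace smoothing)."""
    class_counts = Counter(labels)
    word_counts = defaultdict(Counter)  # class -> word frequencies in that class
    vocab = set()
    for doc, label in zip(docs, labels):
        words = doc.split()
        word_counts[label].update(words)
        vocab.update(words)

    log_priors = {c: math.log(n / len(labels)) for c, n in class_counts.items()}
    log_likelihoods = {}
    for c in class_counts:
        denom = sum(word_counts[c].values()) + alpha * len(vocab)
        # Every vocabulary word gets a non-zero probability, even if unseen in class c.
        log_likelihoods[c] = {
            w: math.log((word_counts[c][w] + alpha) / denom) for w in vocab
        }
    return log_priors, log_likelihoods


def predict(model, doc):
    """Return the class with the highest log-posterior score."""
    log_priors, log_likelihoods = model
    scores = {}
    for c, prior in log_priors.items():
        # Words outside the training vocabulary are simply ignored.
        scores[c] = prior + sum(
            log_likelihoods[c][w] for w in doc.split() if w in log_likelihoods[c]
        )
    return max(scores, key=scores.get)


model = train_naive_bayes(
    ["buy cheap pills", "meeting at noon", "cheap pills now", "lunch meeting today"],
    ["spam", "ham", "spam", "ham"],
)
print(predict(model, "cheap lunch"))  # → spam
```

In this example "lunch" never occurs in a spam document and "cheap" never occurs in a ham document. Without smoothing, both class scores would involve a zero probability. With `alpha=1`, the comparison still has a well-defined winner.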

What is the smoothing technique? I have gone through many materials, and I feel both are the same. For example, consider 100 examples in our dataset, each given a predicted probability of 0.9 by our model; if the model is calibrated, about 90 of them should actually belong to the predicted class. Exponential smoothing was first introduced by Robert Goodell Brown in 1956 and then further developed by Charles Holt in 1957.
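The 100-example case can be checked directly. For a calibrated model, roughly 90 of those 100 examples should turn out to be positive. A simple check groups predictions into probability bins and compares each bin's mean predicted probability with the fraction of examples that are actually positive. The sketch below assumes binary 0/1 labels. The function name `calibration_table`, the bin count, and the toy data are illustrative.

```python
def calibration_table(probs, labels, n_bins=10):
    """Return (mean predicted probability, observed positive rate, count) per non-empty bin."""
    bins = [[] for _ in range(n_bins)]
    for p, y in zip(probs, labels):
        idx = min(int(p * n_bins), n_bins - 1)  # p == 1.0 goes into the last bin
        bins[idx].append((p, y))
    rows = []
    for members in bins:
        if members:
            mean_p = sum(p for p, _ in members) / len(members)
            rate = sum(y for _, y in members) / len(members)
            rows.append((mean_p, rate, len(members)))
    return rows


# 100 predictions of 0.9, of which 90 are actually positive: a calibrated bin.
print(calibration_table([0.9] * 100, [1] * 90 + [0] * 10))
```

If the observed rate in that bin came out well below 0.9 (say 0.7), the model would be overconfident at that probability level.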

Data smoothing can be used to help predict trends, such as those found in … This allows important patterns to stand out. Please explain in a simple way.

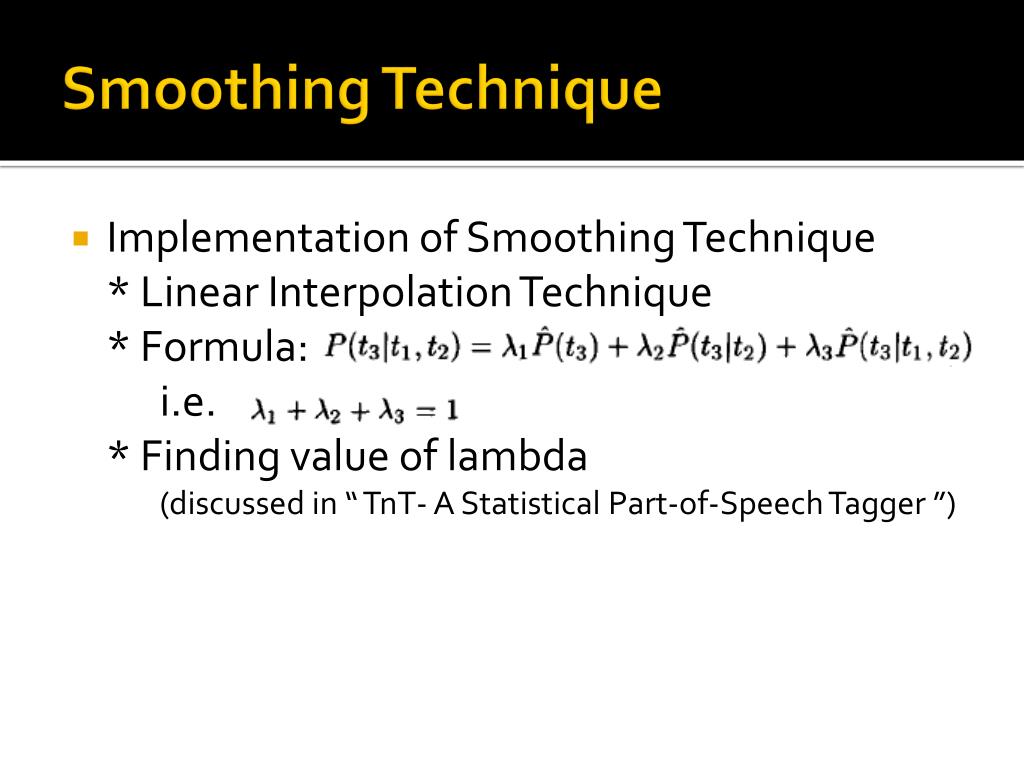

Smoothing is the process of removing random variations that appear as coarseness in a plot of raw time series data. The one assumption that is … In language modeling, smoothing is the process of flattening the probability distribution implied by a language model so that all reasonable word sequences can occur with some probability.
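A simple moving average is one way to remove random variation from raw time series data. Each output point is the mean of the last `window` observations. This is a minimal sketch, and the window size and sample series are arbitrary choices.

```python
def moving_average(values, window=3):
    """Trailing simple moving average; returns len(values) - window + 1 points."""
    if window < 1 or window > len(values):
        raise ValueError("window must be between 1 and len(values)")
    running = sum(values[:window])
    out = [running / window]
    for i in range(window, len(values)):
        # Slide the window: add the newest value and drop the oldest one.
        running += values[i] - values[i - window]
        out.append(running / window)
    return out


print(moving_average([3, 5, 4, 6, 5, 7, 6], window=3))  # → [4.0, 5.0, 5.0, 6.0, 6.0]
```

The raw series zigzags up and down. The smoothed series shows only the gradual upward trend.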

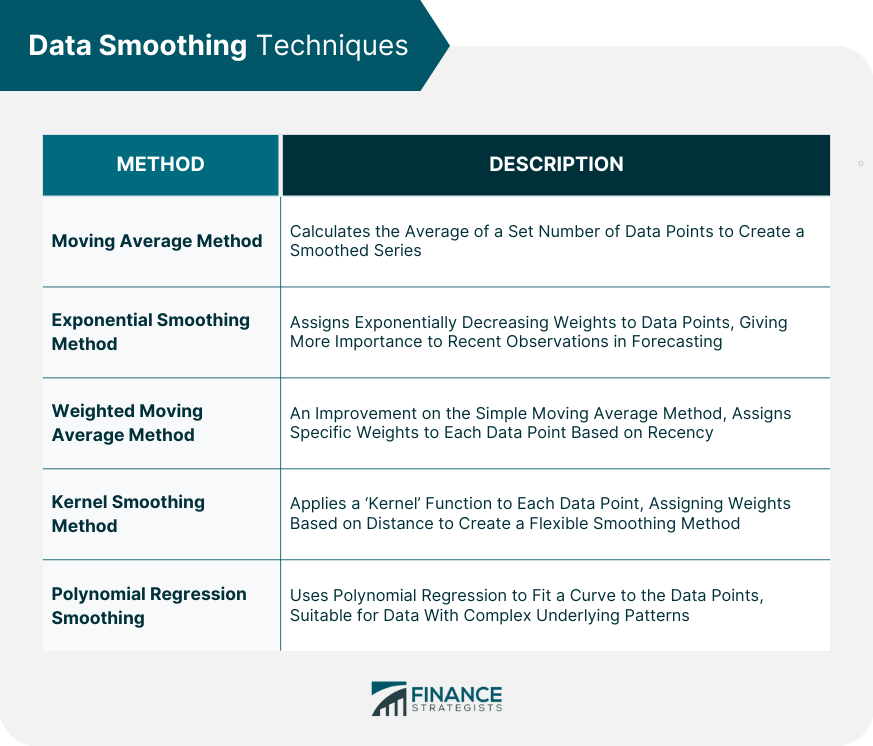

Smoothing can be achieved through a range of techniques, including the average function and the exponential smoothing formula. A classification model is calibrated if its predicted probabilities of outcomes match how often those outcomes actually occur. In smoothing by bin boundaries, each bin value is then replaced by the closest boundary value.
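In its simplest form, the exponential smoothing formula is `s[t] = alpha * x[t] + (1 - alpha) * s[t-1]` with `0 < alpha <= 1`. Recent observations get more weight, and older ones decay geometrically. This sketch starts the smoothed series at the first observation, which is one common convention among several.

```python
def exponential_smoothing(values, alpha=0.5):
    """Simple exponential smoothing: s[0] = x[0], s[t] = alpha*x[t] + (1-alpha)*s[t-1]."""
    if not 0 < alpha <= 1:
        raise ValueError("alpha must be in (0, 1]")
    smoothed = [values[0]]
    for x in values[1:]:
        smoothed.append(alpha * x + (1 - alpha) * smoothed[-1])
    return smoothed


print(exponential_smoothing([10, 14, 8, 12], alpha=0.5))  # → [10, 12.0, 10.0, 11.0]
```

A smaller `alpha` gives a smoother but slower-reacting series. `alpha = 1` reproduces the raw data.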

Robustness remains a paramount concern in deep reinforcement learning (DRL), and randomized smoothing has emerged as a key technique for enhancing it. Smoothing a probability distribution often involves broadening it by redistributing weight from high-probability regions to zero-probability regions. Smoothing is a very powerful technique used throughout data analysis.
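Randomized smoothing builds a smoothed classifier `g` from a base classifier `f`. Here `g(x)` returns the class that `f` predicts most often when Gaussian noise is added to `x`. The sketch below shows only this Monte Carlo prediction step, using a hypothetical one-dimensional threshold classifier as `f`. It leaves out the statistical test and certified radius that randomized smoothing methods use in practice, and it is not specific to DRL. The values of `sigma`, `n_samples`, and `seed` are assumptions.

```python
import random
from collections import Counter


def smoothed_predict(base_classifier, x, sigma=0.5, n_samples=1000, seed=0):
    """Estimate argmax_c P(base_classifier(x + noise) == c), noise ~ N(0, sigma^2)."""
    rng = random.Random(seed)
    votes = Counter(base_classifier(x + rng.gauss(0.0, sigma)) for _ in range(n_samples))
    return votes.most_common(1)[0][0]


def threshold_classifier(x):
    # Hypothetical base classifier: class 1 for inputs above 0, otherwise class 0.
    return 1 if x > 0 else 0


print(smoothed_predict(threshold_classifier, 2.0))  # → 1
```

Near the decision boundary (`x` close to 0), the vote approaches 50/50. This is where a full implementation would abstain rather than return a label.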

Smoothing techniques are data preprocessing techniques used to remove noise from a data set. In smoothing, the data points of a signal are modified so that individual points higher than the adjacent points (presumably because of noise) are reduced, and points lower than the adjacent points are increased, leading to a smoother signal. Other names given to this technique are curve fitting and low-pass filtering.
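A short weighted average shows this behavior: points higher than their neighbors are pulled down, and lower points are pulled up. The sketch below uses weights 1/4, 1/2, 1/4 over each point and its two neighbors, which is a very simple low-pass filter. The weights and the choice to leave the two endpoints unchanged are assumptions.

```python
def smooth_three_point(values):
    """Replace each interior point with 0.25*left + 0.5*center + 0.25*right."""
    out = list(values)  # endpoints are copied unchanged
    for i in range(1, len(values) - 1):
        out[i] = 0.25 * values[i - 1] + 0.5 * values[i] + 0.25 * values[i + 1]
    return out


print(smooth_three_point([2, 2, 10, 2, 2, 0, 2]))  # → [2, 4.0, 6.0, 4.0, 1.5, 1.0, 2]
```

The spike at 10 drops to 6.0, and the dip at 0 rises to 1.0.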

The random method, simple moving average, random walk, simple exponential smoothing, and exponential moving average are some of the methods used for data smoothing. The increasing pattern will continue until the first executions reach their “smoothing end date” and disappear from … In smoothing by bin boundaries, the minimum and maximum values in a given bin are identified as the bin boundaries.
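Smoothing by bin boundaries can be sketched in three steps. First, sort the values. Second, split them into roughly equal-frequency bins. Third, replace each value with whichever of its bin's minimum or maximum is closer. The equal-frequency partitioning and the tie-breaking toward the lower boundary are assumptions of this sketch, and the sample values are illustrative.

```python
def smooth_by_bin_boundaries(values, n_bins):
    """Equal-frequency binning, then replace each value with its nearest bin boundary."""
    data = sorted(values)
    size = -(-len(data) // n_bins)  # ceiling division: values per bin
    smoothed = []
    for start in range(0, len(data), size):
        bin_values = data[start:start + size]
        low, high = bin_values[0], bin_values[-1]  # the bin boundaries
        for v in bin_values:
            # Ties go to the lower boundary.
            smoothed.append(low if v - low <= high - v else high)
    return smoothed


print(smooth_by_bin_boundaries([4, 8, 15, 21, 21, 24, 25, 28, 34], n_bins=3))
# → [4, 4, 15, 21, 21, 24, 25, 25, 34]
```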

Smoothing is designed to detect trends in the presence of noisy data in cases where the shape of the trend is unknown. The most basic estimation technique: sort the array of a …
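When the shape of the trend is unknown, a nonparametric smoother avoids committing to a particular curve. One such method, used here as an illustration and not taken from the source, is the Nadaraya-Watson kernel smoother. Each fitted value is a weighted average of all observations, with Gaussian weights that shrink as the distance in `x` grows. The `bandwidth` value is an assumption and controls how smooth the result is.

```python
import math


def kernel_smooth(xs, ys, bandwidth=1.0):
    """Nadaraya-Watson estimate at each x in xs using a Gaussian kernel."""
    fitted = []
    for x0 in xs:
        # Observations close to x0 get weights near 1, distant ones near 0.
        weights = [math.exp(-0.5 * ((x - x0) / bandwidth) ** 2) for x in xs]
        total = sum(weights)
        fitted.append(sum(w * y for w, y in zip(weights, ys)) / total)
    return fitted


print([round(v, 2) for v in kernel_smooth([0, 1, 2, 3, 4], [1.0, 3.0, 2.0, 4.0, 3.0])])
```

A larger `bandwidth` averages over more neighbors and flattens the curve. A smaller one follows the noise more closely.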

There are methods for reducing or canceling the effect of random variation. Smoothing produces an output that reveals underlying trends, patterns, or cyclical components more clearly, improving the quality of the data for subsequent analysis. Analytic Solver Data Science features four different smoothing techniques.
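To reveal an underlying trend and not just a level, exponential smoothing can be extended with a second equation for the slope. This is the idea behind Holt's linear trend method, also called double exponential smoothing. The sketch below uses the common form `level[t] = alpha*x[t] + (1-alpha)*(level[t-1] + trend[t-1])` and `trend[t] = beta*(level[t] - level[t-1]) + (1-beta)*trend[t-1]`. Initializing the level to `x[0]` and the trend to `x[1] - x[0]` is one of several conventions, and the `alpha` and `beta` defaults are arbitrary.

```python
def holt_linear(values, alpha=0.5, beta=0.5, horizon=1):
    """Holt's linear (double exponential) smoothing; returns (levels, forecasts)."""
    if len(values) < 2:
        raise ValueError("need at least two observations")
    level, trend = values[0], values[1] - values[0]
    levels = [level]
    for x in values[1:]:
        prev_level = level
        level = alpha * x + (1 - alpha) * (level + trend)
        trend = beta * (level - prev_level) + (1 - beta) * trend
        levels.append(level)
    # Extrapolate the last level along the last trend estimate.
    forecasts = [level + h * trend for h in range(1, horizon + 1)]
    return levels, forecasts


print(holt_linear([10, 12, 13, 15, 16], horizon=2))
```

On a perfectly linear series such as `[1, 2, 3, 4, 5]`, the levels reproduce the data and the forecasts continue the line.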

Each execution of a scheduled workload will accumulate over and over again during a period of at least 24 hours. In image processing, a Gaussian blur (also known as Gaussian smoothing) is the result of blurring an image by a Gaussian function (named after mathematician and scientist Carl Friedrich Gauss).
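A Gaussian blur can be sketched by building a normalized 1-D Gaussian kernel and applying it along the rows and then along the columns. This works because the 2-D Gaussian is separable. This pure-Python version assumes a grayscale image stored as a list of equal-length rows and clamps coordinates at the image borders. Both choices, along with the `sigma` and `radius` defaults, are assumptions.

```python
import math


def gaussian_kernel(sigma=1.0, radius=2):
    """Normalized 1-D Gaussian weights for offsets -radius..radius."""
    weights = [math.exp(-(i * i) / (2 * sigma * sigma)) for i in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]


def blur_1d(row, kernel):
    """Convolve one row with the kernel, clamping indices at the edges."""
    radius = len(kernel) // 2
    n = len(row)
    out = []
    for i in range(n):
        acc = 0.0
        for k, w in enumerate(kernel):
            j = min(max(i + k - radius, 0), n - 1)  # clamp to the valid range
            acc += w * row[j]
        out.append(acc)
    return out


def gaussian_blur(image, sigma=1.0, radius=2):
    """Separable Gaussian blur of a 2-D grayscale image (list of equal-length rows)."""
    kernel = gaussian_kernel(sigma, radius)
    rows_blurred = [blur_1d(row, kernel) for row in image]
    # Blur each column of the row-blurred image, then transpose back to rows.
    columns = [blur_1d(list(col), kernel) for col in zip(*rows_blurred)]
    return [list(row) for row in zip(*columns)]


impulse = [[0.0] * 7 for _ in range(7)]
impulse[3][3] = 1.0
blurred = gaussian_blur(impulse)
print(round(blurred[3][3], 3))
```

A single bright pixel spreads into a bell-shaped patch, and its total intensity is preserved away from the borders.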